Buffering … ⏳

The fact is, despite four decades of evolving technology, video conferencing is a sort of low-level magic that still conks out half the time. Here’s why.

Hey all, Ernie here with a recent piece I wrote for Motherboard about conference calls, something that I’m sure many of you have learned to live with over the past couple of weeks. We’re keeping our coronavirus coverage a bit more subdued and minimal, by the way, because you’re hearing about it from everywhere else.

“Many video conferences are a terrible waste of money and provide no more benefit from audio conferencing because users don’t know how to exploit the potential of the visual component.”

— A passage from a 1992 Network World op-ed by writer James Kobielus, who noted that many companies had invested large amounts of money in cross-office video conferencing technology but had failed to find a way to make it more beneficial than just getting on the phone. And this was at a time when literally no advantages of modern technology were available. Here’s what a typical video conferencing setup looked like back then, per the article: “The typical video conference may involve bridged off-site audio, fixed and mobile in-room microphones, multiple steerable wall cameras, stands for still and action graphics (in which the camera remains on the speaker’s hand as it draws), 35mm slide projectors, video cassette recorders, facsimile machines, electronic white boards, and personal computers.”

It took a lot of innovation for video conferencing tech to reach mainstream mediocrity

Sure, conference call technology still kind of sucks at the office in the year 2020, but it’s worth pointing out that we’ve come quite a long way in the process. Even as video conferencing tech remains frustratingly imperfect, it nonetheless reflects our best efforts to chip away at a problem over four decades.

To be clear, video conferencing hardware has come a long way since it first appeared in workplaces in the 1980s. It used to be a six-figure purchase for many companies, and individual calls could cost as much as $1,000 per hour, far more than a worker’s hourly pay at the time.

(Perhaps reflecting this, many early video distribution efforts might have been handled through a one-to-many approach, reliant on the same technologies as cable or satellite television. Not as interactive, but it worked.)

On the plus side, video conferencing tech improved greatly, and its price dropped dramatically within just a few years. When Compression Labs released its first video conferencing system in 1982, it cost $250,000. Prices fell quickly after that: by 1990, it was possible to get a system up and running for as little as $30,000, and by 1993, for less than $8,000.

But video conferencing came with a lot of give-and-take. T1 lines handled about 1.5 megabits per second, roughly the bandwidth needed for a single 480p YouTube video, and in the 1980s they cost thousands of dollars to acquire. (Because of their multi-channel design, intended as the equivalent of 24 separate phone lines, T1 lines remain very expensive today despite being completely outpaced by fiber.)
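The 1.5 Mbps figure follows directly from that 24-channel design. The sketch below just does the arithmetic. It assumes the standard digital-telephony numbers: each voice channel is 8,000 samples per second at 8 bits each, and each 193-bit frame carries one extra framing bit.

```python
# T1 (DS1) line rate, built up from its 24 voice channels.
SAMPLES_PER_SECOND = 8_000   # telephone voice sampling rate
BITS_PER_SAMPLE = 8
CHANNELS = 24                # "the equivalent of 24 separate phone lines"
FRAMING_BPS = 8_000          # one framing bit per 193-bit frame, 8,000 frames per second

channel_bps = SAMPLES_PER_SECOND * BITS_PER_SAMPLE   # bits per second for one voice channel
payload_bps = CHANNELS * channel_bps                 # all 24 channels combined
line_rate_bps = payload_bps + FRAMING_BPS            # total rate on the wire

print(f"Per channel: {channel_bps / 1_000:.0f} kbps")
print(f"Payload:     {payload_bps / 1_000_000:.3f} Mbps")
print(f"Line rate:   {line_rate_bps / 1_000_000:.3f} Mbps")
```

The "about 1.5 megabits" figure is the 1.544 Mbps line rate rounded down.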

This eventually led to systems that ran over less capable networks, such as Compression Labs’ Rembrandt 56. It relied on ISDN lines that often maxed out at 56 kilobits per second at the time. Those $68,000 systems benefited from Compression Labs’ innovations in video compression and promised customers operating costs of only around $50 per hour. (Side note: If you were a telecommuter in the 1980s or 1990s, you were probably using ISDN.)

As you might imagine given that information, video conferencing mostly thrived in government contexts early on, and much of the early research work on the viability of such technology comes from defense research.

“Video compression involves an inherent tradeoff between picture resolution and motion-handling capability,” explains a 1990 thesis, produced by a Naval Postgraduate School student, offering video conferencing recommendations for the Republic of China Navy. “Picture quality depends on the transmission bandwidth. As the bandwidth is reduced, more encoding is required and picture quality is poorer.”
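Here is a rough back-of-the-envelope sketch of that tradeoff. The source format is an assumption chosen for illustration: CIF at 352×288 pixels, 4:2:0 color at 12 bits per pixel, and 15 frames per second. Real codecs do far more than divide bits evenly. Still, the sketch shows how quickly the per-pixel budget shrinks as bandwidth falls.

```python
# Hypothetical source format (an assumption for illustration):
# CIF video, 4:2:0 color sampling (12 bits per pixel), 15 frames per second.
WIDTH, HEIGHT = 352, 288
BITS_PER_PIXEL_RAW = 12
FPS = 15

raw_bps = WIDTH * HEIGHT * BITS_PER_PIXEL_RAW * FPS   # uncompressed bitrate

def required_compression(link_bps: int) -> float:
    """How many times smaller the encoder must make the stream to fit the link."""
    return raw_bps / link_bps

def bits_per_pixel(link_bps: int) -> float:
    """Average number of bits the encoder can spend on each pixel of each frame."""
    return link_bps / (WIDTH * HEIGHT * FPS)

for label, link_bps in [("T1", 1_544_000), ("56 kbps", 56_000)]:
    print(f"{label:>8}: {required_compression(link_bps):6.1f}:1 compression, "
          f"{bits_per_pixel(link_bps):.3f} bits/pixel")
```

Lowering `FPS` raises the bits available per pixel, and raising it lowers them. That is the resolution-versus-motion tradeoff the thesis describes.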

It’s worth pointing out that video conferencing was an important driver of advances in image compression throughout the 1980s. In fact, Compression Labs, responsible for many of those early innovations, became a source of controversy in the 2000s. A new owner purchased the company and tried to use a patent on a compression algorithm used in the JPEG format to sue major corporations. The patent troll settled with many major technology companies in 2006 after a court found the patent only covered video uses.

Consumer-grade video conferencing technology, a.k.a. webcams, appeared in 1994 with the Connectix QuickCam, a device I am intimately familiar with. It cost around $100. It worked poorly on early PCs because of the limited bandwidth of parallel ports and other early interfaces, but it led to decades of innovation afterwards. (USB and FireWire ports, the latter used by the iSight camera Apple sold during the early 2000s, offered the bandwidth ordinary consumers needed to at least shoot video, if not distribute it over the internet.)

And in the corporate arena, a number of key innovations were still taking place. In 1992, Polycom released its first conference phone, a multi-pronged calling device designed to handle multiple people talking in a room at once. It has been a mainstay of offices for nearly three decades. A few years later, WebEx, which was later acquired by Cisco, developed some of the earliest internet-enabled video conferencing technology, much of which is still used today.

Software for video transmission improved greatly in the early 2000s with Skype. Its ability to handle high-quality video and audio conversations over a peer-to-peer network proved a key innovation in conferencing technology. After Microsoft acquired Skype, though, the company moved away from that original peer-to-peer design, shifting the network’s “supernodes” off users’ machines and onto servers it controlled.

By the mid-2000s, webcams had become common components in laptops and desktops, an idea proposed as far back as Hewlett Packard’s Concept PC 2001. Among the earliest computers with built-in webcams were the iMac G5 and the first-generation Intel MacBook Pro.

So now we have all this gadgetry that lets us connect remotely without too many problems. But why aren’t we happy with it? A few reasons.

1.5Mbps

The recommended bandwidth for a high-definition Skype video call. (For those playing at home, that’s about the same amount of bandwidth a T1 line pumps out.) Voice calls over the Microsoft-owned VoIP service need only about 100kbps to work well, but they often compete with other things on your machine or within your network. If you have a torrent or two loaded, that might be a problem.

Why conference call technology sucks at the office

In a lot of ways, answering the question of why video conferencing still sucks requires us to step back. Despite all the innovation we’ve thrown at it over the years, we’re often trying to do a lot at once with video on inconsistent ground.

We have all this innovation in front of us, but that innovation is competing with more demands on our networks than ever.

Say you’re sitting at home with your laptop and a 100-megabit fiber optic connection. Unless you’re streaming a video game in the next room and downloading 15 torrents at the same time, odds are you won’t be the one having hiccups.

But if you’re in an office with 100 people, and that office is managing multiple video conferences on a daily basis, the load can put a lot of stress on your office’s internet connection.

For example, here’s a scenario that has become common these days. Say an all-hands meeting is taking place one afternoon, but you’re in the middle of a task on deadline. Rather than walking 50 feet to the conference room, you decide to call in. And say that 10 of your coworkers decide to do the same thing. Despite the fact that you’re all in the same giant room, none of these connections are happening locally. You’re joining a meeting on your own local network by way of the internet. Your office’s connection has to send lots of extra data out, then pull it right back in again.
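Here is a rough sketch of why that adds up fast. It makes a hypothetical assumption: every endpoint sends one stream up to a cloud-hosted meeting service and receives one stream back, at about 1.5 Mbps each way (the HD Skype recommendation above). Real apps adapt their bitrates, so treat the numbers as illustrative.

```python
# Hypothetical assumption: every endpoint sends one ~1.5 Mbps stream up to a
# cloud meeting service and receives one ~1.5 Mbps stream back down.
PER_STREAM_MBPS = 1.5

def office_link_load(desk_callers: int, room_systems: int = 1) -> float:
    """Mbps in each direction that in-office participants put on the office's
    internet link when the meeting is hosted outside the building."""
    endpoints = desk_callers + room_systems
    return endpoints * PER_STREAM_MBPS

# You plus 10 coworkers calling in from your desks, plus the conference room itself.
load = office_link_load(desk_callers=11)
print(f"{load:.1f} Mbps up and {load:.1f} Mbps down, for one meeting in one building")
```

If everyone had walked to the conference room, only the room system would need to leave the building, at about 1.5 Mbps each way under the same assumption.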

If you work at, say, Google, where a massive network infrastructure has been built out, this may not be such a problem. But for smaller companies, corporate networking can be extremely expensive, costing hundreds or thousands of dollars a month on top of installation costs. As a result, you may run out of headroom at some point.

Suddenly, a video conference that was designed to accommodate people who had to call in remotely is in full-on stutter mode, and there’s only so much compression can do about that. Even in the best of circumstances, your digital pipe has a lot to handle.

(This might be one of the reasons that your IT guy tells you that you can’t load Dropbox on your work PC.)

But even small amounts of latency can create big problems. Ever find yourself talking over someone? That can happen when a little latency creeps in. Audio and video are also often distributed using different methods (sometimes even different devices), so they can fall out of sync, with the audio running ahead of the speaker’s lips. As the videoconferencing firm 8x8 notes, 300 milliseconds is an acceptable amount of latency for a video call. But that’s still enough lag for two people to start talking over one another. (Maybe a walkie-talkie approach is needed?)
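Here is one way to see why 300 milliseconds is enough to cause collisions. This is a toy model of our own, not 8x8’s. Each side hears the other only after a one-way delay, so two people talk over each other whenever each starts speaking before the other’s first words have arrived. The 200 ms reaction time below is a hypothetical value.

```python
# Toy model (an assumption, not 8x8's methodology): each side hears the
# other only after a fixed one-way network delay.

def talk_over(start_a_ms: float, start_b_ms: float, one_way_latency_ms: float) -> bool:
    """True if each person started speaking before the other's voice reached them."""
    return abs(start_a_ms - start_b_ms) < one_way_latency_ms

def reply_gap_ms(one_way_latency_ms: float, reaction_ms: float) -> float:
    """Silence the first speaker hears before a reply arrives: their words travel
    out, the listener reacts, and the reply travels back."""
    return 2 * one_way_latency_ms + reaction_ms

print(talk_over(0, 250, one_way_latency_ms=300))  # True: neither heard the other in time
print(talk_over(0, 250, one_way_latency_ms=20))   # False: B hears A before starting
print(reply_gap_ms(300, reaction_ms=200))         # hypothetical 200 ms reaction time
```

The reply gap matters too. A long pause tempts the first speaker to start talking again, right as the delayed reply arrives.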

An unsolicited shout-out for a cool tool

In my years of remote work, I’ve tried many of the things I mention in this piece. That includes various videoconferencing tools, noise-canceling headphones, and different strategies for making sure I’m not degrading the quality of a call.

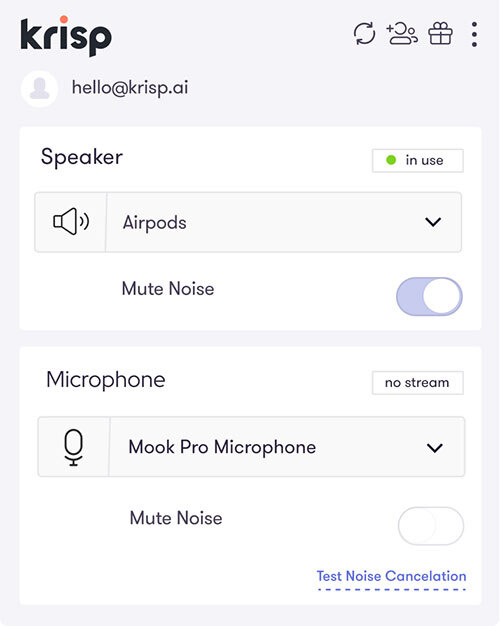

But I just wanted to give a major shoutout to Krisp, a tool I’ve personally used for more than a year to make conference calls bearable. By using artificial intelligence, it convincingly removes background noise from calls on all sorts of platforms, and is sort of a secret weapon to ensure that any setting can actually offer up good call quality.

Anyway, if you want to try Krisp, they’re offering discounts and other benefits amid the COVID-19 outbreak, when, let’s face it, this kind of thing is going to come in handy. Use our affiliate link to sign up and support Tedium during a strange time. They didn’t ask me to do this; I just felt they were worthy of a big shout-out in this issue on conference call tech.

Why conference call technology sucks outside of the office

But even if you’re at home or on the go, there are likely going to be issues that keep you from getting the best possible audio and video experience. They probably aren’t going to be strictly bandwidth-related.

Some of it will have to do with noise, some with camera quality, some with the choices you make as far as audio goes.

On the camera quality front, an interesting phenomenon has emerged in recent years. We want better cameras, but we also want thinner bezels, which leaves less room for better cameras. This has left webcams with nowhere to go. Either the technology shrinks, or manufacturers try to relocate it, which has proven more controversial than helpful. Most infamously, Huawei’s decision to put the MateBook X Pro’s camera under a pop-up key proved to be the most controversial element of an otherwise well-reviewed laptop.

And this comes at a time when camera fidelity is seen as increasingly important. As a result, laptop webcams are significantly worse than their mobile equivalents. The 16-inch MacBook Pro, for example, uses a 720p webcam, while many recent smartphones can reasonably shoot 4K video from their front-facing cameras. Even if your stream can’t handle full resolution, odds are you’ll get better results starting from a sharper, less compressed source.

Speaking of compression, the recent push to remove headphone jacks from everything has created a pretty significant problem for conference calls. To put it simply: Most versions of Bluetooth have traditionally had pretty crappy audio quality when using a microphone. For most of the spec’s history, its design has meant you can’t get high-quality audio while the microphone is in use, because of a bandwidth crunch. Headsets drop from the music-oriented A2DP profile to the Hands-Free Profile, which carries low-bitrate mono voice audio. That crunch gets worse if your device is connected to a 2.4 gigahertz WiFi network, which shares its frequency band with Bluetooth.

In practice, microphone quality degrades significantly on a Bluetooth headset. Bluetooth 5.0 can deliver more bandwidth for a potentially better headset mic experience, but it is still fairly uncommon in all but the latest PCs and smartphones. The reality is that if you’re trying to join a video conference, you should just spring for wired headphones, possibly even ones that connect via USB. I’ve found even high-end noise-canceling wireless headphones with ambitious microphone setups, such as the Sennheiser PXC 550, problematic for video conference use. (These days, I use the much-ballyhooed Sony WH-1000XM3 headset. Despite all the work put into its microphone, I plug it into a headphone cable with a built-in mic when I need to take a call.)

Additionally, calling in with a phone number can have a similar effect on call quality, since voice lines are heavily compressed. (It’s the same reason hold music sounds particularly terrible.) If given the choice between dialing in and connecting through an app, in most cases you should favor the app.
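Part of why dial-in audio sounds muffled is sampling rate. The traditional phone network samples voice 8,000 times per second, and so, traditionally, have Bluetooth headsets in hands-free mode. A digital signal can only represent frequencies up to half its sampling rate (the Nyquist limit). The sketch below compares a few common rates. The labels are illustrative, and real phone lines also filter out the lowest frequencies.

```python
# Nyquist limit: a sampled audio stream can only represent frequencies
# up to half of its sampling rate.
SAMPLE_RATES_HZ = {
    "Telephone / narrowband voice": 8_000,
    "Wideband voice": 16_000,
    "CD-quality music": 44_100,
}

def nyquist_limit_hz(sample_rate_hz: int) -> float:
    """Highest frequency a stream sampled at sample_rate_hz can carry."""
    return sample_rate_hz / 2

for name, rate in SAMPLE_RATES_HZ.items():
    print(f"{name:<30} {rate:>6} Hz sampling -> content up to {nyquist_limit_hz(rate):>7.0f} Hz")
```

Cymbals, sibilants, and much of what makes music sound full sit well above the 4 kHz ceiling of a phone line, which is why hold music suffers so badly.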

As for sound, it’s worth considering your environment. Echoey room? Perhaps not the best for a conference call. And while a coffee shop may make you more creative, you might be pulling out your hair trying to find a quiet environment for chatting (as well as a fast-enough internet connection, because business-class internet is expensive).

The good news on the background noise front, however, is that technology can help make your microphone more resistant to the sound of Frappuccino blenders. Krisp, an app for macOS, Windows, and iOS, uses artificial intelligence to convincingly get rid of many background noises in noisy environments. While not free, it can give you or your team a lot of flexibility on the road. Linux users also have a somewhat effective option: PulseAudio has a setting that can be turned on to remove background noise from recordings in real time. (It’s not as good as Krisp, but it’s free!)

A similar innovation on the video front, background blur, is available in Microsoft’s primary video chat tools, Teams and Skype.

Soon enough, you’ll be able to take part in a video conference at Starbucks just as well as you can at home, as long as you’re not under a quarantine.

But even if you get the right headphones, cancel all the noise around you, find the perfect room, and do everything else right, there’s no getting past the fact that everyone else on the call has to do their part as well. One person might join with a busy highway in the background, connect via the world’s worst wireless network, fail to find the mute button, or choose not to change out of their pajamas (or worse). If so, the whole experience is destined to go downhill fast. And at a time when people are being asked to work from home, there may not be a Plan B.

In many ways, this is more a cultural issue than a technical one. Still, it can be explained using a term more closely associated with cybersecurity than all-hands meetings: the attack surface. The more people on the call, the bigger the attack surface, and the higher the potential for one weak link to ruin the whole call.

Tools like the ubiquitous Zoom give hosts ways to mute or hide particularly disastrous conference call fails. But honestly, this is a professional environment, and in some cases the fix may come down to management.

On a call, every person is a potential point of failure—so, as much as is possible, it’s on them to ensure that they’re not ruining it for everyone else.

Look, let’s be honest with ourselves here. Video conferencing software is not perfect, but it has come a long way. It is now good enough to let people work from home regularly with little impact on their work.

A great example is e-learning, essentially the education version of video conferencing. It is seen as so useful that it has ended the practice of snow days in some school districts. (Not all of them, however: As EdWeek notes, the option isn’t available in many districts. Its benefits will be split between haves and have-nots, just as K-12 education often is in general.)

Given everything we’re able to do with internet access and the huge amount of data we ship from place to place, it’s impressive any of this stuff works as well as it does. A lot of compression and quality degradation has to happen for that video conference to work at all.

So you may have to repeat yourself every so often. Perhaps, instead, we should be impressed that your coworkers can hear you most of the time.

--

Find this one an interesting read? Share it with a pal! And I definitely recommend you give Krisp a try.
