Today in Tedium: My favorite sound in computing is one that I haven’t actually had to use on a computer in nearly 20 years. The modem was a connection to a world outside of my own, and to get that connection required hearing the sounds of a loud, abrasive handshake that could easily be mistaken for Lou Reed’s Metal Machine Music. I’d like to compare it to another kind of sound for a little bit—the noise of a “straight key” used for a telegraph. Both technologies, despite more than a century in age difference, seemingly turned data into sound, then into electrical pulses, and back into sound again. It’s no wonder, then, that you can actually trace the roots of the modem back to the telegraph, and later the teletype machine. Data and wires, simply put, go way back. And it’s not the only example of the telegraph’s quiet influence on modern computing. Today’s Tedium draws a line between the modern computer and the pulses that inspired it. — Ernie @ Tedium

Today’s GIF is from the movie WarGames, one of the few films to show off the acoustic coupler-style modem.

News Without Motives. 1440 is the daily newsletter helping 2M+ Americans stay informed. It’s news without motives, edited to be as unbiased as humanly possible. The team at 1440 scours more than 100 sources so you don't have to: culture, science, sports, politics, business, and everything in between, in a five-minute read each morning, 100% free. Subscribe here.

A telegraph key. (Matthew Boyle/Flickr)

The roots of the modem can be found in the telegraph

As noted above, the modem, at least in its telephone-based forms, represents a dance between sound and data. It translates information into an audio signal, then into electrical current, then back into an audio signal, and finally back into data. That modulation and demodulation closely resembles the process used with the original telegraph, except that the telegraph did it manually: one person input information that was translated into electric pulses, and another person received the result on the other end.

Modems work the same way, except no additional people are needed on the other end.
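To make that modulate/demodulate round trip concrete, here is a minimal sketch of frequency-shift keying (FSK), the technique early telephone modems used to turn bits into tones and back. It assumes the Bell 103 originate-side tones (1,270 Hz for a 1, 1,070 Hz for a 0) at 300 bits per second, plus a 9,600 Hz sample rate chosen so each bit is exactly 32 samples; the function names are hypothetical, and it ignores noise, filtering, and clock recovery.

```python
import math

SAMPLE_RATE = 9600   # samples per second (assumed; divides evenly by the bit rate)
BAUD = 300           # one bit per symbol, so 300 bits per second
MARK_HZ = 1270       # tone for a 1 bit (Bell 103 originate side, assumed)
SPACE_HZ = 1070      # tone for a 0 bit
SAMPLES_PER_BIT = SAMPLE_RATE // BAUD  # 32 samples per bit


def modulate(bits):
    """Turn a list of 0/1 bits into audio samples, one tone per bit."""
    samples = []
    phase = 0.0
    for bit in bits:
        freq = MARK_HZ if bit else SPACE_HZ
        step = 2 * math.pi * freq / SAMPLE_RATE
        for _ in range(SAMPLES_PER_BIT):
            samples.append(math.sin(phase))
            phase += step  # keep the phase continuous across bit boundaries
    return samples


def tone_energy(chunk, freq):
    """Correlate a chunk against a reference tone; large when that tone is present."""
    i = q = 0.0
    for n, s in enumerate(chunk):
        angle = 2 * math.pi * freq * n / SAMPLE_RATE
        i += s * math.cos(angle)
        q += s * math.sin(angle)
    return i * i + q * q  # phase-independent energy


def demodulate(samples):
    """Recover bits by checking which tone dominates each bit-length chunk."""
    bits = []
    for start in range(0, len(samples), SAMPLES_PER_BIT):
        chunk = samples[start:start + SAMPLES_PER_BIT]
        mark, space = tone_energy(chunk, MARK_HZ), tone_energy(chunk, SPACE_HZ)
        bits.append(1 if mark > space else 0)
    return bits


if __name__ == "__main__":
    print(demodulate(modulate([1, 0, 1, 1, 0])))
```

A real modem also has to cope with line noise and with not knowing exactly where each bit starts; this sketch sidesteps both by decoding its own clean output.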

Considering that context, it makes sense that, for a time, data transmission and voice transmission evolved along parallel paths. You could make the case that, before Alexander Graham Bell came up with the telephone, he was effectively working on a modem of sorts. Here was the problem he was working on before the first phone call was made: the telegraph system, with its limited communication style of dots and dashes, limited how much information could be transmitted at once. In the mid-1870s, Bell was exploring an idea he called a “harmonic telegraph,” which allowed multiple messages to be sent over the same wire at once, as long as each one used a different pitch.

Bell wasn’t the only one working on an idea of this nature—other inventors were toying with the idea of using harmonics to send multiple telegraph messages at once, including Western Electric founder Elisha Gray—who notably was a major personal competitor of Bell.

There’s a lot of story in there, including a conflict about ownership that makes Bell look like a thief (I’ll let someone else tell it), but long story short, the concept of using the harmonic telegraph to send data through electric lines was overshadowed by the fact that voice could be sent the same way.
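In modern terms, the harmonic telegraph idea is a form of frequency-division multiplexing: each message gets its own pitch, all the pitches share one wire, and the receiver listens for each pitch separately. The sketch below simulates that with on/off-keyed tones added together on a single "line" and picked apart again by correlation. The three pitches, the slot length, and the function names are hypothetical values chosen for illustration, not the figures Bell or Gray actually used.

```python
import math

SAMPLE_RATE = 8000          # samples per second (assumed)
SLOT = 400                  # samples per keying slot (0.05 s)
PITCHES = [500, 700, 900]   # one hypothetical pitch (Hz) per telegraph channel


def transmit(channels):
    """Mix several on/off channels onto one line; channel k keys PITCHES[k]."""
    line = []
    for t in range(len(channels[0])):
        for n in range(SLOT):
            i = t * SLOT + n
            sample = 0.0
            for pitch, keys in zip(PITCHES, channels):
                if keys[t]:  # this channel's key is down during slot t
                    sample += math.sin(2 * math.pi * pitch * i / SAMPLE_RATE)
            line.append(sample)
    return line


def level(chunk, start, pitch):
    """Strength of one pitch within a chunk (0.5 for a present unit sine, ~0 if absent)."""
    i = q = 0.0
    for n, s in enumerate(chunk):
        angle = 2 * math.pi * pitch * (start + n) / SAMPLE_RATE
        i += s * math.cos(angle)
        q += s * math.sin(angle)
    return math.hypot(i, q) / len(chunk)


def receive(line, threshold=0.25):
    """Split the shared line back into one on/off stream per pitch."""
    out = [[] for _ in PITCHES]
    for t in range(len(line) // SLOT):
        chunk = line[t * SLOT:(t + 1) * SLOT]
        for k, pitch in enumerate(PITCHES):
            out[k].append(1 if level(chunk, t * SLOT, pitch) > threshold else 0)
    return out


if __name__ == "__main__":
    messages = [[1, 0, 1, 1], [0, 1, 1, 0], [1, 1, 0, 0]]
    print(receive(transmit(messages)) == messages)
```

The pitches are picked so each one completes a whole number of cycles per slot, which keeps the channels from bleeding into each other; Bell's mechanical version relied on tuned reeds to do the same separating.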

The idea of improving data transmission never faded, however, and by the early 20th century it had advanced by leaps and bounds: the telegraph gained the ability to multiplex, or send multiple messages at once over the same line. This ability proved particularly important for newspapers, which became increasingly reliant on wire services around the country. Per the Encyclopedia of American Journalism:

Telegraph news became such a pervasive presence that it transformed journalism beyond the big cities. Many small-town papers increased their frequency to daily production once they received a steady diet of telegraph news. The distribution of news by telegraph also gave rural newspapers an edge in competing with city publications; they obtained news by wire and published it for local readers before city papers with similar reports could circulate in the countryside.

Helping with this was a device called the teletype, which could not only receive messages but also send them. The idea evolved from the 1869 creation of the stock ticker, which was one of the first devices to use telegraph technology to produce text.

A Telex machine. (ajmexico/Flickr)

Eventually, the teletype allowed for the primitive distribution of a limited data set using a five-bit binary code known as the Baudot code, which was later standardized, in revised form, as International Telegraph Alphabet No. 2 (ITA2). Five bits allow only 32 combinations, so the code reserved special “letters” and “figures” shift characters to switch between two sets of symbols, which let it cover the alphabet, numbers, and some punctuation. It effectively built upon the basic ideas of Morse code, and it can best be described as a predecessor of ASCII, which drew on telegraph codes like it and in turn became the bridge to more modern encoding standards such as Unicode.

While not exactly the same as the data that gets pushed through our internet in the modern era, it borrows from many of the same concepts, and the lineage is clear. It’s not the only way in which telegraph/teletype technology clearly inspired computing, either.
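To see how a five-bit code stretches to cover letters and numbers, here is a sketch of Baudot-style encoding with ITA2. Since 2**5 is only 32, two codes act as "letters" (LTRS) and "figures" (FIGS) shift characters that change how every following code is read. The values below follow the ITA2 table as it is commonly listed in RTTY software (bit 1 as the least significant bit), with punctuation and control characters left out; the function names are hypothetical, and it is worth checking a reference chart before relying on the exact values.

```python
LTRS, FIGS, SPACE = 0x1F, 0x1B, 0x04

# Letters shift, as commonly listed for ITA2 (assumed; punctuation omitted).
LETTERS = {
    "E": 0x01, "A": 0x03, "S": 0x05, "I": 0x06, "U": 0x07, "D": 0x09,
    "R": 0x0A, "J": 0x0B, "N": 0x0C, "F": 0x0D, "C": 0x0E, "K": 0x0F,
    "T": 0x10, "Z": 0x11, "L": 0x12, "W": 0x13, "H": 0x14, "Y": 0x15,
    "P": 0x16, "Q": 0x17, "O": 0x18, "B": 0x19, "G": 0x1A, "M": 0x1C,
    "X": 0x1D, "V": 0x1E,
}
# In figures shift, the top keyboard row doubles as digits: Q=1, W=2, ... P=0.
FIGURES = {d: LETTERS[c] for d, c in zip("1234567890", "QWERTYUIOP")}


def encode(text):
    """Encode text as 5-bit codes, inserting shift codes only when needed."""
    codes, shift = [], "letters"  # assume the receiver starts in letters shift
    for ch in text.upper():
        if ch == " ":
            codes.append(SPACE)  # space is the same code in both shifts
        elif ch in LETTERS:
            if shift != "letters":
                codes.append(LTRS)
                shift = "letters"
            codes.append(LETTERS[ch])
        elif ch in FIGURES:
            if shift != "figures":
                codes.append(FIGS)
                shift = "figures"
            codes.append(FIGURES[ch])
        else:
            raise ValueError(f"no code for {ch!r} in this sketch")
    return codes


def decode(codes):
    """Decode 5-bit codes, tracking which shift is currently active."""
    rev_letters = {v: k for k, v in LETTERS.items()}
    rev_figures = {v: k for k, v in FIGURES.items()}
    out, table = [], rev_letters
    for code in codes:
        if code == LTRS:
            table = rev_letters
        elif code == FIGS:
            table = rev_figures
        elif code == SPACE:
            out.append(" ")
        else:
            out.append(table[code])
    return "".join(out)


if __name__ == "__main__":
    print([format(c, "05b") for c in encode("HI 5")])
    # ['10100', '00110', '00100', '11011', '10000']
```

The shift mechanism is why a stray bit could garble a whole line of teletype copy: miss one FIGS or LTRS and every character after it is read from the wrong table.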

45.45

The generally accepted speed of the teletype network in the United States, in baud (signal units per second; on a teletype line, each unit carries one bit). This speed was equivalent to about 60 words per minute. Other parts of the world were slightly faster, however: in Europe, the generally accepted speed was 50 baud, or around 66 words per minute. While teletypes were initially used to communicate directly between devices, their use eventually evolved into the Telex network, which made it possible to distribute data through a telegraph wire or via radio. Telex launched in Europe in the early 1930s, and AT&T launched its own version of the network, TWX, starting in 1931.
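The words-per-minute figures fall out of some simple arithmetic. A teletype sends each character as one start pulse, five data pulses, and a stop pulse; the sketch below assumes the conventional stop lengths of 1.42 units at 45.45 baud and 1.5 units at 50 baud, and the usual convention that a "word" is six characters (five letters plus a space). The function name is hypothetical.

```python
def words_per_minute(baud, stop_units, data_units=5, start_units=1, chars_per_word=6):
    """Convert a teletype line speed in baud to words per minute."""
    units_per_char = start_units + data_units + stop_units  # e.g. 1 + 5 + 1.42 = 7.42
    chars_per_second = baud / units_per_char
    return chars_per_second * 60 / chars_per_word


us = words_per_minute(45.45, stop_units=1.42)   # about 61.25
europe = words_per_minute(50, stop_units=1.5)   # about 66.67

if __name__ == "__main__":
    print(f"US: {us:.1f} wpm, Europe: {europe:.1f} wpm")
```

The results land at roughly 61 and 67 words per minute, which is where the nominal "60 speed" and "66 speed" labels come from.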

The RS-232 serial port, a widely used computing port that can interface with some teletype machines. (Seth Morabito/Flickr)

The teletype directly influenced early computer connectivity and an iconic operating system

When people were plugging modems into their computers for the first time, they were generally doing so through an RS-232 serial port, a port that was effectively a bridge between the past and what was then the present.

First introduced in 1960 by the now-defunct Electronic Industries Association, the RS-232 standard was fundamental to the growth of the modem, as it set out a standardized way for a data terminal to communicate with a communications device (in the standard's terms, data terminal equipment, or DTE, and data communication equipment, or DCE).

It also set the basic appearance and approach for the serial port, the most common external expansion port before the rise of USB. (The standard is paywalled, but the Internet Archive has a document dating to the 1980s from the National Bureau of Standards that covers the same territory, if you feel like reading details about an outdated technical standard.)

But it’s worth noting that RS-232 was designed for the machines that were common at the time of its creation: teletypes, which worked much like terminals, except with ink instead of pixels. It’s weird to think about in our screen-friendly world, but before screens were the norm, we used paper, and teletypes were our dumb terminals. (Case in point: When monitors first came out, they were called “glass teletypes.”)

The lineage of the RS-232 port is highlighted by what preceded it. Before the first serial port, teletypes generally used something called a current loop interface, which signaled data by switching a current (commonly 20 or 60 milliamps) on and off, rather than by changing voltage, which is how data usually travels through wires today. You can actually still buy a converter that turns RS-232 serial signals into current loop signals.

(I would pay money to see someone convert HDMI to USB-C to USB-A to PS/2 to RS-232 to current loop and then plug it into a teletype, just to see what happens. That’s my idea of fun.)
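Part of why that conversion is even possible is that RS-232 and the current loop carry the same start-stop framing teletypes used: the line idles in the "mark" state, a start bit (space) announces a character, the data bits follow least significant bit first, and a stop bit (mark) returns the line to idle. The sketch below frames one byte and then expresses those bits both ways. The ±12 V and 20 mA values are typical assumptions (RS-232 treats roughly -3 to -15 V as mark and +3 to +15 V as space), and the function names are hypothetical.

```python
def frame(byte, data_bits=8, stop_bits=1):
    """Return the bits sent on the wire for one character (1 = mark, 0 = space)."""
    bits = [0]                                            # start bit is a space
    bits += [(byte >> i) & 1 for i in range(data_bits)]   # data, least significant bit first
    bits += [1] * stop_bits                               # stop bit(s) return the line to mark
    return bits


def as_rs232_volts(bits, level=12.0):
    """RS-232 signals mark with a negative voltage and space with a positive one."""
    return [-level if b else level for b in bits]


def as_current_loop_ma(bits, loop_ma=20):
    """A current loop signals mark by letting current flow and space by breaking it."""
    return [loop_ma if b else 0 for b in bits]


if __name__ == "__main__":
    bits = frame(ord("A"))  # 0x41 -> [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]
    print(as_rs232_volts(bits))
    print(as_current_loop_ma(bits))
```

A five-bit teletype character would use `data_bits=5`, though real teletypes used a fractional stop length (such as 1.42 units) that this whole-bit sketch does not model.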

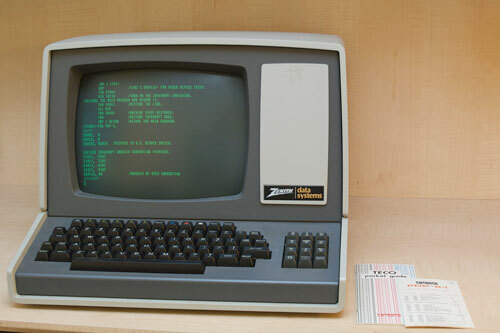

A Zenith Z19 “dumb” terminal, which in some ways was an evolution of the teletype. The device used an RS-232 serial interface. (ajmexico/Flickr)

This kind of relationship between the teletype and the computer also showed itself in another important way: in UNIX, the operating system on which many modern operating systems, including macOS and Linux, are based or modeled.

The structure of UNIX, software originally developed at AT&T’s Bell Labs, was directly inspired by the teletypes still in use at the time, even though the computer was seemingly meant to replace them. As UNIX evolved into something of a philosophical basis for operating systems, this structure never went away. A 2008 blog post from software engineer Linus Åkesson highlights how deeply the teletype shaped the operating system:

In present time, we find ourselves in a world where physical teletypes and video terminals are practically extinct. Unless you visit a museum or a hardware enthusiast, all the TTYs you’re likely to see will be emulated video terminals—software simulations of the real thing. But as we shall see, the legacy from the old cast-iron beasts is still lurking beneath the surface.

There’s a lot there, too much to summarize, but let’s put it this way: If you type “tty” into a terminal window on a UNIX-based or UNIX-like operating system such as macOS or Linux, it will print the name of the terminal device connected to that session (something like /dev/ttys000 on macOS or /dev/pts/0 on Linux).

TTY stands for teletype, and the teletype eventually evolved into the terminal emulator—the same one that’s probably easily accessible on your computer right now.
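Programs can do the same lookup the `tty` command does. On Unix-like systems, Python's standard library exposes it as `os.ttyname()`, and `os.openpty()` creates one of the pseudo-terminals that terminal emulators are built on. A minimal sketch (Unix only; the helper names are hypothetical):

```python
import os
import sys


def current_tty():
    """Return the terminal device attached to standard input, like the `tty` command."""
    try:
        fd = sys.stdin.fileno()
    except (AttributeError, ValueError, OSError):
        return None  # no usable standard input at all
    if not os.isatty(fd):
        return None  # e.g. input is piped from a file; `tty` prints "not a tty"
    return os.ttyname(fd)


def new_pseudo_terminal_name():
    """Open a pseudo-terminal pair, the software stand-in for a physical teletype."""
    controller, follower = os.openpty()
    try:
        return os.ttyname(follower)  # e.g. /dev/pts/3 on Linux
    finally:
        os.close(controller)
        os.close(follower)


if __name__ == "__main__":
    print(current_tty() or "not a tty")
```

Every terminal window you open gets a pseudo-terminal like the one `new_pseudo_terminal_name()` creates, which is the emulated teletype Åkesson describes.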

Beyond the terminal, of course, the teletype made an even more direct imprint on the computer—thanks to an inventor who was trying to get around a monopolistic telecom giant.

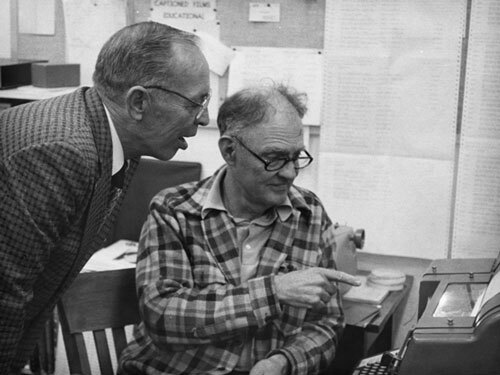

Robert Weitbrecht, showing off his acoustic coupler-based modem. (via Gallaudet University)

How the needs of Deaf culture—along with the teletype—helped shape the modem

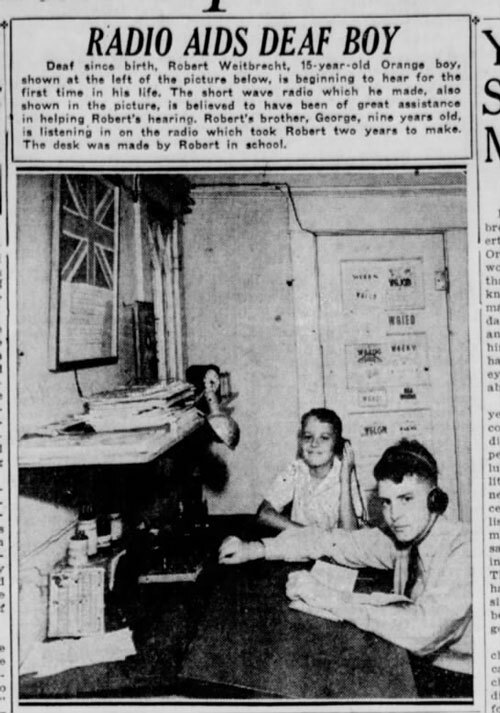

Robert Weitbrecht, who was born Deaf, knew early on of the benefits of technology and how they could help him better connect with the world—and get past his disability.

At the age of 15, he had built his own radio, which was believed to help him with his hearing and was considered an impressive enough feat that it was featured in his local newspaper. His curiosity would follow him throughout his life—especially through inventions that helped fellow Deaf people communicate.

Weitbrecht, in a newspaper article dating to his teen years. (Santa Ana Register/Newspapers.com)

Weitbrecht eventually worked his way into a role with the Stanford Research Institute, a university-affiliated facility that played important roles in the invention of numerous technologies, including ERMA, an early check processing computer I wrote about a while back. Weitbrecht’s role at the institute eventually put him in a position where he invented a device that would prove fundamental to bringing interactive computing into the home: the acoustic coupler.

Most people probably aren’t familiar with this device, which was already out of style by the time the average person first got online with a modem. But the acoustic coupler, which converted the audio coming out of a telephone receiver into data, was an important evolution of traditional teletype technology, which had communicated over a network separate from the phone system.

Weitbrecht’s invention essentially made it possible to bring the basic capability of a teletype machine into homes, where Deaf individuals could use the devices to communicate over the same lines that hearing people had been using for telephone calls for nearly a century.

Now, you might be wondering, why didn’t he just create a simple box to do this, rather than going through the additional trouble of an acoustic coupler? That thing added a whole bunch more parts for the simple purpose of making the noises come through an actual phone; technically, it shouldn’t have been necessary, right?

Well, part of the problem here was regulatory in nature, not just technical. See, at the time Weitbrecht was developing the acoustic coupler, AT&T had a tight grip on the way its network could be used—and legal precedent had not caught up with the available technology. A 1968 decision called the Carterfone decision, which I referenced in this piece about cell phone ringtones, would allow outsiders to make devices for the phone network that weren’t approved by AT&T.

But Weitbrecht didn’t have that advantage at the time. Per A Phone of Our Own: the Deaf Insurrection Against Ma Bell, a book about early efforts to build a TTY system, Weitbrecht spent significant amounts of time trying to come up with a solution to the problem that would avoid conflict with the phone giants:

Weitbrecht faced several obstacles as he set out to develop the telephone modem. First, the telephone modem could not be connected directly to telephone company equipment. The telephone companies were strict about “foreign attachments.” They were concerned that when a customer connected another device directly to the telephone lines, there might be electrical interference with the company’s signals. AT&T’s restrictions on direct connections frustrated Weitbrecht’s attempt to find solutions. He knew that a direct connection to the phone line would reduce garble in the TTY messages. But anyone who attempted a direct connection ran the risk of having telephone services stopped. In an attempt to satisfy AT&T, Weitbrecht spent years conducting experiments with a modem that avoided a direct connection.

This led to Weitbrecht’s Eureka moment with the acoustic coupler, which converted data into audio tones that an ordinary handset could carry over the phone line, then turned the tones picked up on the other end back into data. There were limitations in terms of how fast this kind of connection could be, of course (the added step of conversion created a point of degradation), and for much of its history, the acoustic coupler modem topped out at a mere 300 bits per second, only later seeing an upgrade to 1,200 bits per second.
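Baud and bits per second are often used interchangeably, but they only match when each symbol carries one bit. A 300 bps modem of this era sent one bit per symbol, while later 1,200 and 2,400 bps designs (such as the Bell 212A and V.22bis) ran at 600 baud and packed two or four bits into each symbol. The sketch below converts those rates into characters per second, assuming the common "8N1" framing of ten bits per character (start, eight data, stop) and an 80-by-25 screen of 2,000 characters; the function name is hypothetical.

```python
def chars_per_second(baud, bits_per_symbol=1, bits_per_char=10):
    """Characters per second for an async modem link (8N1 = 10 bits per character)."""
    bits_per_second = baud * bits_per_symbol
    return bits_per_second / bits_per_char


# (label, symbol rate in baud, bits carried per symbol)
LINKS = [
    ("300 bps, 1 bit/symbol", 300, 1),
    ("1200 bps, 2 bits/symbol", 600, 2),
    ("2400 bps, 4 bits/symbol", 600, 4),
]

if __name__ == "__main__":
    for label, baud, bits in LINKS:
        cps = chars_per_second(baud, bits)
        print(f"{label}: {cps:.0f} chars/s, {2000 / cps:.0f} s to fill an 80x25 screen")
```

At 300 bps, filling a single screen takes over a minute, which is a long wait for a computer but plenty fast for a typed conversation.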

Even though the Carterfone decision meant modems were no longer legally required to go through this extra step, the acoustic coupler remained in use for a number of years, in part because connector standards weren’t put into place until the late 1970s. Those RJ11 jacks that you plug into phones today, while invented in the 1960s, did not come into standard use until Jimmy Carter (who had no affiliation with the Carterfone, by the way) was president and regulatory precedent made something like them necessary.

(It’s arguable that the RJ11 connector was something of a prelude to the breakup of Ma Bell—the antitrust suit that eventually led to the “Baby Bells” was taking place at the time the FCC implemented the RJ11 standard.)

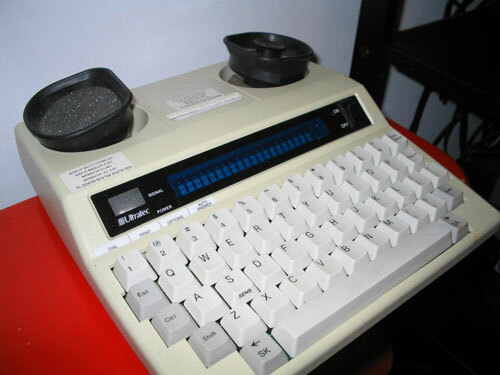

A teletype machine of the type Weitbrecht helped to popularize. (Sclozza/Wikimedia Commons)

Nonetheless, the acoustic coupler and its attached modem were a revelation, and their speed limitations were not a problem for the original intended use case. Weitbrecht and his fellow inventors, James C. Marsters and Andrew Saks, were able to retrofit old teletype machines to be used over the phone system, creating a new use case for the telephone network that opened it up to Deaf individuals. Later iterations came with the acoustic coupler built right in, and some are still sold by Weitbrecht Communications, a company founded by Weitbrecht and Marsters that sells TTY devices to the Deaf community to this day.

By the time I first got to use a 2400 bps modem with a computer, this technology was already very mature, and my dial-up terminal programs of the day could still technically dial the same teletype machines that saw wide use within the Deaf community. The modem reached that point of maturation thanks in no small part to a few inventors who saw a way to extend the life of the teletype in a valuable way.

Looking at a dim terminal screen, staring at stark green letters or dull gray characters, remains for many people a preferred way of communicating directly with our computers, despite the later success of the graphical user interface and the touch screen.

The first question someone staring at a screen like this for the first time might have is, “What do I do next?” Rarely do we consider the question, “How did we get here?”

Perhaps we should, at least a little bit more. While it would be a stretch to call a telegraph or a teletype the direct inspiration for a world that gave us the smartphone or augmented reality, the truth is that modern computers share more lineage with pre-computing technology than we give them credit for.

We may not discuss our connectivity speed in baud anymore—not when we can measure data by the gigabits per second—but these points of evolution have clearly had an impact on what we, as modern computer users, actually got to use at the end of the day.

A trackpad is, of course, infinitely more functional than a straight key, but if you squint hard enough, maybe you’ll see the resemblance.

--

Find this an interesting read? Share it with a pal!

And thanks again to 1440 for sponsoring.