Having thought about it, I feel my stance on AI is perhaps not as negative as that of some of my peers. I think it’s overhyped, sure, but I’m not in full doomer mode. In a narrow context, AI has real benefits.

The problem is, the people implementing and making the technology have let the hype outpace the potential benefits of the technology, while implementing it in ways that clearly undermine underlying systems—and norms. (I hear the CEO of Zoom has 50 meetings today.)

Adobe’s a great example. The company exists to serve creative people, but has recently focused on products apparently designed not for creativity (where I saw some potential early on) but for optimizing the role of creative output so humans are less necessary. AI has been sold as a tool for optimization, for extracting value, when it should be sold as a tool to build upon our creativity, and the average user is very quickly figuring this out.

Recently, a debate has cropped up in journalistic circles that highlights the way tech firms push large language models to replace our creativity, rather than build upon it. Last month, Perplexity AI launched a feature called Pages that promised to be “your new tool for easily transforming research into visually stunning, comprehensive content.”

Essentially, it is a tool for writing miniature book reports about what you’ve been researching. It is a robot that makes the SEO-traffic-generating pages itself. Which sounds like the AI replacing the work of actual people, and extracting their value in the process.

This was bad enough, but then an editor at Forbes noticed that, a mere week after this feature launched, Perplexity had taken a long, paywalled report about former Google CEO Eric Schmidt’s interest in drones and essentially rewritten it, with the credit buried in a way that would discourage people from clicking further. And that piece got a lot of traffic, little of which, if any, went to Forbes.

Debates about hiding sourcing in aggregation aren’t new. As I wrote about five years ago, modern-day journalistic gadfly Michael Wolff upset fellow journalists with his aggregation site Newser, which made little effort to link its sources and ultimately extracted real reporting resources to support its own traffic.

In many ways, Perplexity has invented an automated version of circa-2010 Newser. And Forbes was pissed. Perplexity CEO Aravind Srinivas tried to defend what the company did, writing, “It has rough edges, and we are improving it with more feedback.”

But Forbes isn’t having it, sending a formal legal threat to the company this week.

Now to be clear, aggregation was always something of an ethical black hole. Many of your favorite writers started with aggregation, this writer included. Blogging, at its heart, is taking information you found elsewhere—through other links, via experiences, and elsewhere—and aggregating it to your readership. Sites like Gawker gained most of their traffic through aggregation, but they did so with style.

Journalists who aggregate, at least the ones who care about their jobs, tend to know the limits to what they can take, as well as the value that their individual perspective brings to the content. (Some might point out that Forbes spent the 2010s focused less on its own quality work than on similar race-to-the-bottom endeavors, but the report taken by Perplexity’s book-report engine was very much not that.)

Aggregation can be done well, and creatively, in a way that benefits both the developer of the aggregation and the reporting. I’d like to think my old site ShortFormBlog, with its prominent “source” links, was additive aggregation at heart.

But what Perplexity AI is doing is not that, and its “rough edges” defense highlights that point neatly. The company is essentially taking information from other sources, re-reporting the information using AI, minimizing outside links, siphoning the traffic, and brushing off any criticism of what it has built as “rough edges.” It’s not additive: it’s not like the bots are doing interviews, commenting on the report, or highlighting unexpected perspectives. They are literally reporting the thing, no more, no less.

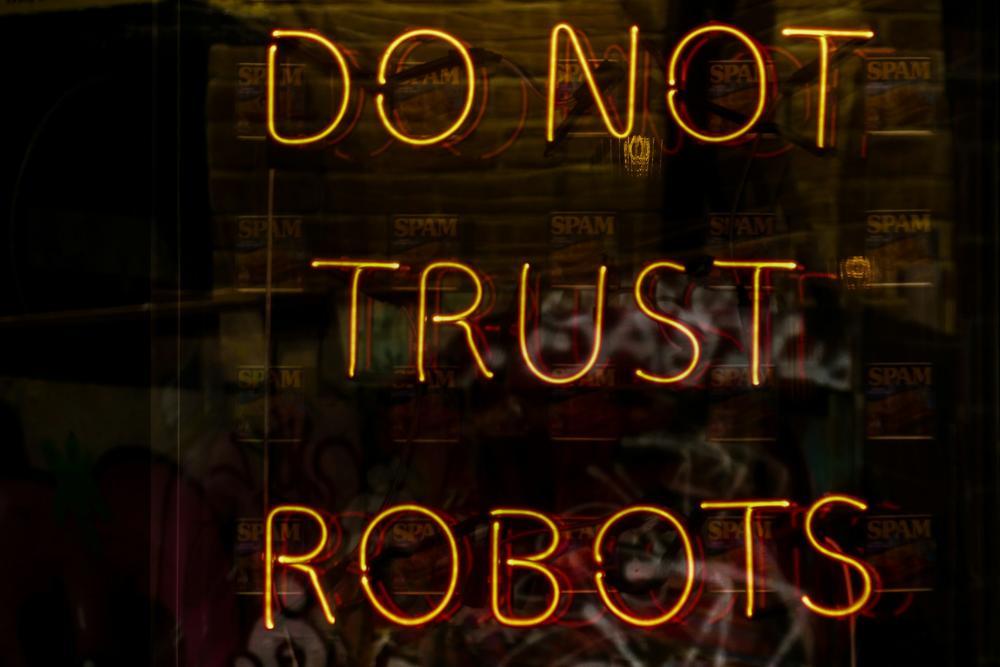

Despite using large-language tools built to generate content, it doesn’t make anything. In fact, per Wired, it sidesteps the guardrails of the internet: the tool doesn’t even respect robots.txt.
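For anyone unfamiliar with what respecting robots.txt looks like in practice, here is a minimal sketch using Python's standard-library `urllib.robotparser`. To be clear, this is only an illustration of the convention: the sample rules, the `ExampleBot` user agent, the `is_allowed` helper, and the example.com URLs are all made up, and none of it describes how Perplexity's crawler actually works.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, invented for illustration.
# It blocks one named crawler everywhere and keeps every other
# crawler out of /private/ only.
SAMPLE_ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("ExampleBot", "OtherBot"):
        for url in ("https://example.com/article",
                    "https://example.com/private/notes"):
            print(agent, url, is_allowed(SAMPLE_ROBOTS_TXT, agent, url))
```

A well-behaved crawler runs a check like this before every fetch and skips anything the site has disallowed. Nothing technically enforces it, which is why it only works as a guardrail if the crawler chooses to honor it.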

Admittedly, the whims of the algorithm stymie human writers, too. As friend of Tedium Jason Koebler wrote at 404 Media, our tendency to get caught up in whatever Elon Musk is doing shows human journalists’ tendency to lean on low-hanging-fruit traffic built on the thinnest of ideas. This stuff gets traffic without a lot of output. As Koebler put it:

This is not to denigrate the journalists who are writing these articles. Most of them are working in large companies where their bosses’ bosses’ bosses are trying to squeeze the remaining blood out of a programmatic advertising traffic stone that has been near dry for years and threatens to evaporate entirely as AI answers replace normal search results and social media further frays. Most of their bosses’ bosses’ bosses probably hope to replace these human beings who are writing Elon Musk tweets a thing articles with an AI generating Elon Musk tweets a thing articles.

Stuff like Perplexity’s Pages is how we get to the bosses’ bosses’ dream of AI-generated Elon Musk tweets a thing articles. But more importantly, it’s also how we turn the fruit of months-long reporting endeavors into computer-generated articles that produce the information with none of the flair (not even its own flair) and none of the work.

If we’re going to embrace what large-language models do, we should have higher standards or choose not to use them at all.

Human-Generated Links

Jay Hoffman’s excellent The History Of The Web has a great piece on the rise of SEO and the trademark controversy that nearly put the common term in a single person’s ownership.

If, for some reason, you are still unconvinced of the brilliance of Weird Al Yankovic as a satirist and parodist, I present “[This Song's Just] Six Words Long,” which has a chorus that’s seven words long. That’s a direct reference to its source material—George Harrison’s cover of “Got My Mind Set On You,” a song with six words in the title, but seven words in the chorus.

Harry McCracken, a fellow fan of 1994 tech, published an excellent piece about the multimedia CD-ROM’s coming-out party. Encarta was such a trip.

I am starting a quiet campaign to get Threads to support following people in the fediverse. Who’s going to join me?
